Qdrant supports ARM architecture!

Kacper Łukawski

September 21, 2022

End users typically do not care much about processor architecture, as long as all the applications they use run smoothly. If you use a PC, chances are you have an x86-based device, while your smartphone most likely runs on an ARM processor. In 2020, Apple introduced its ARM-based M1 chip, which is used in modern Mac devices, including notebooks. The main differences between the two architectures are the set of supported instructions and energy consumption. ARM processors are considerably more energy-efficient and cheaper than their x86 counterparts. That is why hosting providers, including cloud platforms, now offer them as an affordable alternative.

To make an application available to ARM users, it has to be compiled for that platform. Otherwise, the device has to emulate it, which adds overhead and reduces performance. We decided to provide Docker images built specifically for ARM users. Of course, a more limited set of processor instructions may affect the performance of your vector search, which is why we tested both architectures using a similar setup.
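Before choosing an image, it can help to confirm which architecture a host actually reports. The snippet below is a minimal sketch using only the Python standard library; the helper name `normalize_arch` and the alias table are illustrative assumptions, not part of Qdrant.

```python
import platform

# Machine names commonly reported by operating systems, mapped onto two labels.
# The alias table is an illustrative assumption and is not exhaustive.
_ARCH_ALIASES = {
    "aarch64": "arm64",   # typical on Linux ARM hosts
    "arm64": "arm64",     # typical on macOS with Apple silicon
    "x86_64": "x86_64",   # typical on Linux and macOS Intel/AMD hosts
    "amd64": "x86_64",    # typical on Windows
}


def normalize_arch(machine: str) -> str:
    """Return 'arm64', 'x86_64', or 'unknown' for a raw machine string."""
    return _ARCH_ALIASES.get(machine.lower(), "unknown")


if __name__ == "__main__":
    # platform.machine() returns the raw name reported by the host.
    print(normalize_arch(platform.machine()))
```

If the result does not match the architecture of the image you run, the container will most likely be emulated, with the overhead described above.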

Test environments

AWS offers ARM-based EC2 instances that are 20% cheaper than the corresponding x86 alternatives with a similar configuration. That estimate is based on the eu-central-1 region (Frankfurt) and the R6g/R6i instance families. For this comparison, we used an r6i.large instance (Intel Xeon) and compared it to an r6g.large instance (AWS Graviton2). Both setups have 2 vCPUs and 16 GB of memory, and these were the smallest comparable instances available.

The results

For this test, we generated random vectors and compared them using cosine distance.
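For readers who want to picture this kind of workload, here is a minimal sketch in plain Python. The dimensionality (128), the number of vectors (1000), and the seed are assumptions chosen for illustration; the article does not state the values used in the test. Cosine distance is defined here as one minus cosine similarity, which is a common convention and not necessarily how the engine reports scores.

```python
import math
import random


def random_vector(dim, rng):
    """Generate a vector with components drawn uniformly from [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Assumed sizes, for illustration only.
rng = random.Random(42)
vectors = [random_vector(128, rng) for _ in range(1000)]
query = random_vector(128, rng)

# Brute-force nearest neighbour: the index with the smallest distance to the query.
nearest = min(range(len(vectors)), key=lambda i: cosine_distance(query, vectors[i]))
print(nearest, cosine_distance(query, vectors[nearest]))
```

A vector search engine avoids this exhaustive scan by using an index, but the distance being minimised is the same idea.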

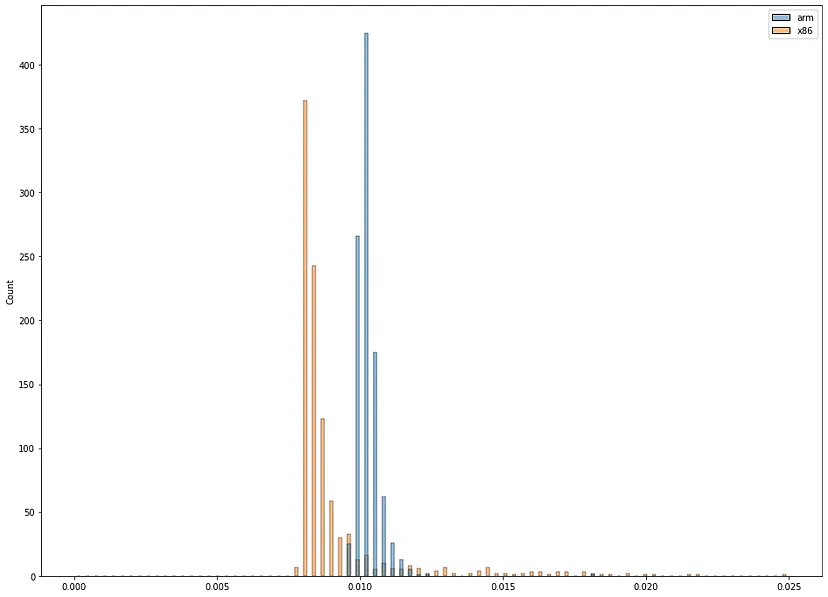

Vector search

During our experiments, we performed 1000 search operations on both the ARM64 and the x86-based setups. We did not measure network overhead; we used only the time reported by the engine in the API response. The chart shows the distribution of that time, separately for each architecture.
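The statistics discussed here (mean, median, and how often a query is much slower than average) can be computed from a list of reported times. The following is a minimal sketch; the function name `summarize` is hypothetical and the sample values are placeholders, not measurements from this test.

```python
import statistics


def summarize(times):
    """Summarise engine-reported search times given in seconds."""
    mean = statistics.mean(times)
    return {
        "mean": mean,
        "median": statistics.median(times),
        # Number of searches that took more than twice the average time.
        "slow_outliers": sum(1 for t in times if t > 2 * mean),
    }


# Placeholder values for illustration only, NOT results from the article.
example_times = [0.010, 0.011, 0.012, 0.011, 0.050]
print(summarize(example_times))
```

Reporting the median next to the mean matters because a few slow queries can pull the mean up while leaving the median almost unchanged.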

ARM64 looks like an interesting alternative if you are on a budget. It is 10% slower on average and 20% slower at the median, but its performance is more consistent: unlike x86, it does not seem to become randomly two times slower than the average. Keeping in mind that it is 20% cheaper on AWS, that makes ARM64 a cost-effective way of setting up vector search with Qdrant. You do get less for less, but surprisingly more than expected.
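The trade-off can be made concrete with some back-of-the-envelope arithmetic. The sketch below assumes that "10% slower" means a search takes 1.10 times as long, that an instance handles queries one after another, and that it is billed per unit of time; under those assumptions the relative cost per query is simply the price ratio multiplied by the latency ratio. The function name is hypothetical.

```python
def relative_cost_per_query(price_ratio, latency_ratio):
    """Cost per query on ARM64 relative to x86 (1.0 means equal cost).

    price_ratio:   ARM64 instance price divided by x86 instance price.
    latency_ratio: ARM64 search time divided by x86 search time.
    """
    return price_ratio * latency_ratio


ARM_PRICE_RATIO = 0.80  # 20% cheaper, as quoted for eu-central-1

# Using the average slowdown (10%) and the median slowdown (20%).
mean_case = relative_cost_per_query(ARM_PRICE_RATIO, 1.10)
median_case = relative_cost_per_query(ARM_PRICE_RATIO, 1.20)

print(f"mean-based:   {mean_case:.2f}")
print(f"median-based: {median_case:.2f}")
```

Both ratios come out below 1.0 (roughly 0.88 and 0.96), which is consistent with the conclusion that ARM64 is somewhat cheaper per query despite being slower. Real workloads with concurrency or different pricing may behave differently.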