How Fieldy AI Achieved Reliable AI Memory with Qdrant

Daniel Azoulai

·September 04, 2025

On this page:

Fieldy AI’s migration to Qdrant: Building a fault-tolerant AI memory platform

Capturing and retrieving a lifetime of conversations

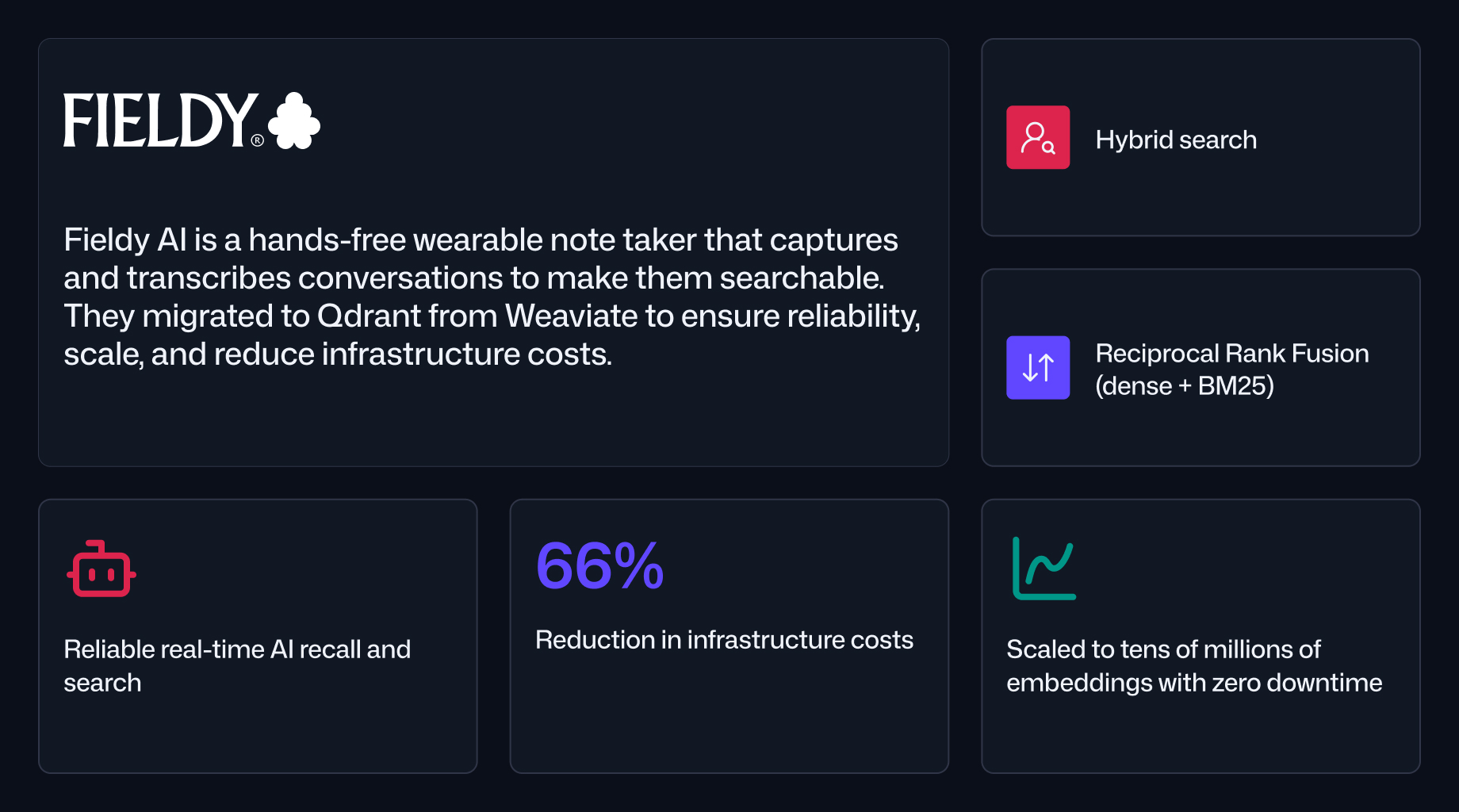

Fieldy is a hands-free wearable AI note taker that continuously records, transcribes, and organizes real-world conversations into your personal, searchable memory. The system’s goal is simple in concept but demanding in execution: capture every relevant spoken interaction, transcribe it with high accuracy, and make it instantly retrievable. This requires a robust ingestion pipeline, a scalable vector search layer, and a retrieval process capable of handling growing volumes of multimodal data without introducing latency or errors.

From the start, the engineering team treated transcription reliability as the primary design constraint. If a conversation is not captured in the moment, it cannot be reconstructed later. This applies equally to Bluetooth transfer from the AI wearable pendant to the app, HTTPS uploads to the backend, speech-to-text transcription, embedding generation, and vector database ingestion. Every component had to meet this standard.

How Fieldy AI differentiates in a crowded market

Fieldy’s multilingual, real-time transcription and instant recall make it a trusted voice recorder for professionals, healthcare providers, and anyone needing memory support, including those with ADHD. The engineering team is built to iterate quickly on quality and features in this fast-moving space. They maintain a direct feedback loop with users, enabling rapid prioritization of features that matter most in real usage. Finally, Fieldy’s multilingual transcription enables high-accuracy transcription in over 100 languages, using custom speech-to-text pipelines and continuous evaluation.

Fieldy’s device

Reliability challenges with the initial vector database

Fieldy’s original architecture used Weaviate for vector storage and retrieval. Both the hosted and self-hosted deployments experienced persistent operational issues, most notably 5xx errors affecting roughly 10% of requests. These failures occurred both during ingestion and search, undermining the product’s promise of complete and accessible memory. Attempts to address the problem, including migrating to self-hosted infrastructure, provided only temporary relief before the errors returned months later.

For the engineering team, these failures had two serious implications. First, missing data meant a permanent loss of user trust. Second, time spent debugging database issues directly slowed feature development. The team’s requirements for a replacement were clear: the new vector database had to eliminate persistent query failures, ingest and search tens of millions of vectors with low latency, and run with minimal operational intervention.

Selecting and deploying Qdrant

After evaluating alternatives, Fieldy selected Qdrant for its stability, straightforward configuration, and suitability for self-hosted deployment. They opted to run Qdrant in the same environment as their backend services, ensuring low-latency access and avoiding the cross-region connectivity issues that had contributed to failures in the previous architecture.

The migration process took less than a week. The team reused their existing vector schema to avoid redesigning their indexing logic during the transition. Embeddings, generated using Cohere’s multilingual v3 model, were batched locally before ingestion, replacing the integrated embedding calls previously handled within Weaviate. Following Qdrant best practices, they disabled indexing during bulk import to maximize throughput, re-enabling it only after the migration was complete. The backend API calls were updated to use Qdrant’s gRPC interface, and hybrid search with BM25 was combined with dense vector retrieval via Reciprocal Rank Fusion (RRF) for relevance scoring.

Architecture after migration

In Fieldy’s current architecture, the AI transcription device streams audio to the mobile app over Bluetooth. The app sends audio to the backend via HTTPS, where it is processed by a speech-to-text model to produce a transcript. This transcript and embeddings are stored in Qdrant.

When a user submits a query in the chat interface, the backend’s retrieval agent performs a hybrid search in Qdrant. The HNSW index is used for dense vector similarity, BM25 handles term matching, and results are merged with Reciprocal Rank Fusion. Conversation metadata is fetched from Firestore for context assembly before the results are returned to the user.

Results in production

Since the migration, Fieldy has delivered 100% reliable real-time AI recall and seamless memory search, ensuring the AI note taker’s performance matches its promise. Hosting Qdrant alongside the backend has reduced latency and virtually eliminated the network errors previously seen in cross-region deployments.

Fieldy also achieved a two-thirds reduction in infrastructure costs after moving to Qdrant, while scaling to handle tens of millions of embeddings without operational incidents. Post-migration interventions have been limited to planned storage increases.

Next steps for retrieval quality

With reliability and cost efficiency achieved, Fieldy’s engineering focus is shifting toward retrieval quality. Planned improvements include adding location filtering and datetime filtering within Qdrant to refine result sets, experimenting with late chunking strategies, and testing parallel hybrid searches to increase recall on complex multi-faceted queries. The team is also exploring embedding summaries alongside raw transcript segments to improve retrieval performance on high-level or thematic searches.