Chat with a codebase using Qdrant and n8n

Anush Shetty

January 06, 2024

n8n (pronounced n-eight-n) helps you connect any app with an API. You can then manipulate its data with little or no code. With the Qdrant node on n8n, you can build AI-powered workflows visually.

Let’s go through the process of building a workflow. We’ll build a service that lets you chat with a codebase.

Prerequisites

- A running Qdrant instance. If you need one, use our Quick start guide to set it up.

- An OpenAI API Key. Retrieve your key from the OpenAI API page for your account.

- A GitHub access token. If you need to generate one, start at the GitHub Personal access tokens page.

Building the App

The app consists of two workflows (if you’re new to n8n, the n8n quick start guide explains workflow semantics):

- A workflow to ingest a GitHub repository into Qdrant

- A workflow for a chat service with the ingested documents

Workflow 1: GitHub Repository Ingestion into Qdrant

For this workflow, we’ll use the following nodes:

Qdrant Vector Store - Insert: Configure with Qdrant credentials and a collection name. If the collection doesn’t exist, it’s created automatically with the appropriate configuration.

GitHub Document Loader: Configure with the GitHub access token, repository name, and branch. In this example, we’ll use qdrant/demo-food-discovery@main.

Embeddings OpenAI: Configure with OpenAI credentials and the embedding model options. We use the text-embedding-ada-002 model.

Recursive Character Text Splitter: Configure the text splitter options. We use the defaults in this example.
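
The Recursive Character Text Splitter cuts each file into chunks before it is embedded. The idea is to split on the coarsest boundary first (blank lines, then line breaks, then spaces) and fall back to a finer boundary only when a piece is still too long. The following is a simplified, hypothetical Python sketch of that idea: the `recursive_split` name, the 1000-character default, and the separator list are assumptions, chunk overlap is omitted, and this is not the actual implementation used by n8n.

```python
def recursive_split(text, chunk_size=1000, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters.

    Tries the coarsest separator first and only recurses with finer
    separators for pieces that are still too long. Separators at chunk
    boundaries are dropped.
    """
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, *rest = separators
    if sep == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks, current = [], ""
    for piece in text.split(sep):
        # Try to grow the current chunk with the next piece.
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: split it with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, tuple(rest)))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

Splitting on blank lines first tends to keep functions and paragraphs intact, which gives the embedding model more coherent chunks than fixed-width cuts would.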

Connect the workflow to a manual trigger. Click “Test Workflow” to run it. You can follow the progress in real time as the data is fetched from GitHub, split into chunks, transformed into vectors, and loaded into Qdrant.

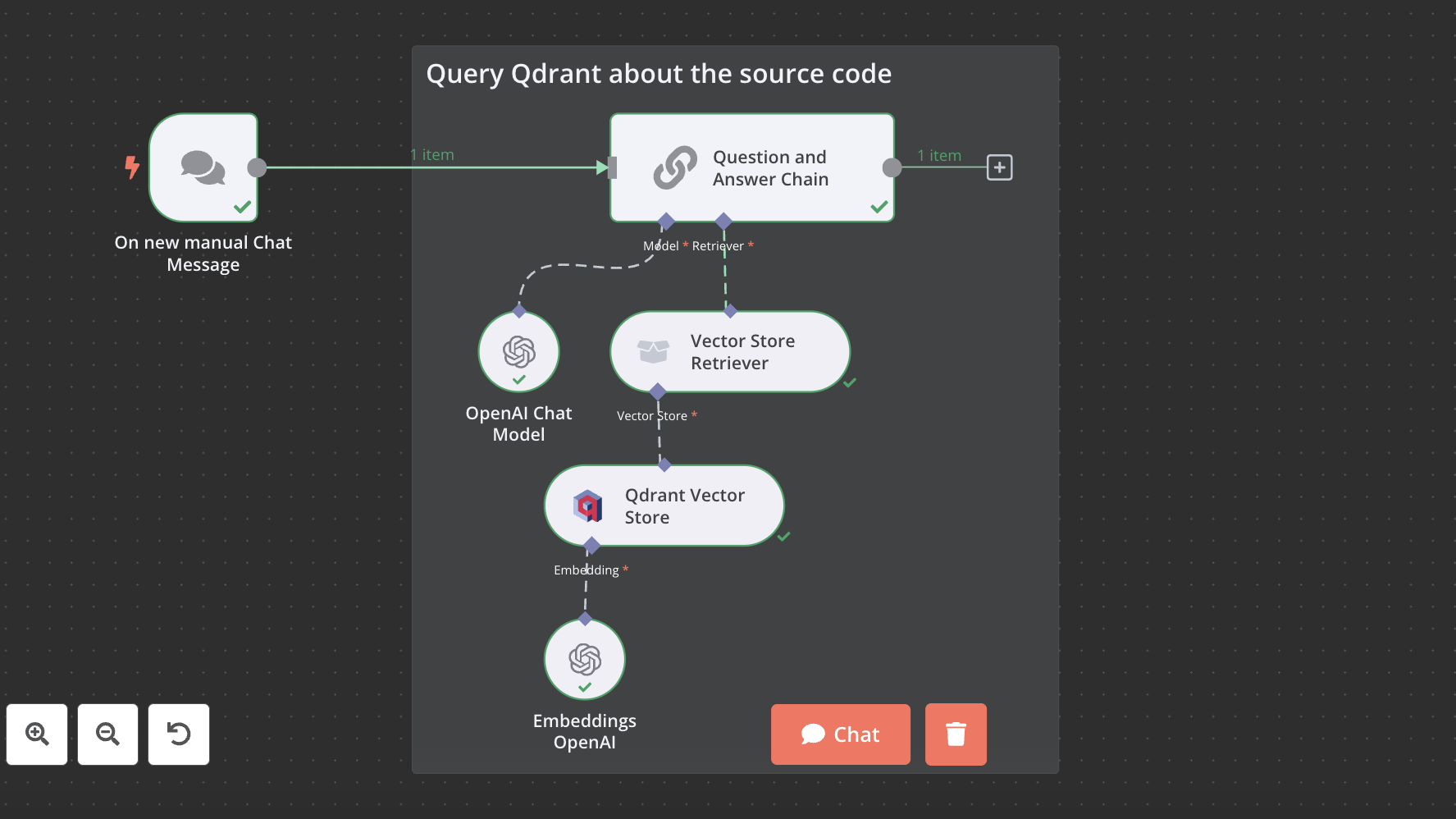
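
When the workflow runs, the Insert node creates the collection if needed and then stores one point per chunk. To show roughly what that amounts to, here is a sketch that builds equivalent request payloads for Qdrant’s REST API (`PUT /collections/{name}` to create a collection, `PUT /collections/{name}/points` to upsert points) without sending them. The cosine distance and the `content`/`metadata` payload keys are assumptions about what the node uses, not its documented behavior. The 1536-dimensional vector size matches the output of text-embedding-ada-002.

```python
import uuid

EMBEDDING_DIM = 1536  # output size of text-embedding-ada-002


def create_collection_request(name, dim=EMBEDDING_DIM):
    """Return (method, path, JSON body) for Qdrant's create-collection endpoint."""
    # Assumption: cosine distance, a common choice for OpenAI embeddings.
    body = {"vectors": {"size": dim, "distance": "Cosine"}}
    return "PUT", f"/collections/{name}", body


def upsert_points_request(name, chunks, vectors, metadata, dim=EMBEDDING_DIM):
    """Return (method, path, JSON body) storing each chunk with its embedding."""
    if len(chunks) != len(vectors):
        raise ValueError("need exactly one vector per chunk")
    points = []
    for text, vector in zip(chunks, vectors):
        if len(vector) != dim:
            raise ValueError(f"expected {dim}-dim vector, got {len(vector)}")
        points.append({
            "id": str(uuid.uuid4()),  # Qdrant accepts UUID strings or unsigned integers
            "vector": vector,
            # Hypothetical payload layout: the chunk text plus source metadata.
            "payload": {"content": text, "metadata": metadata},
        })
    return "PUT", f"/collections/{name}/points", {"points": points}
```

Storing the chunk text in the payload means a search result can be handed straight to the chat model without a second lookup.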

Workflow 2: Chat Service with Ingested Documents

This workflow uses the following nodes:

Qdrant Vector Store - Retrieve: Configure with Qdrant credentials and the name of the collection the data was loaded into in Workflow 1.

Retrieval Q&A Chain: Configure with default values.

Embeddings OpenAI: Configure with OpenAI credentials and the embedding model options. Use the same model as in Workflow 1 (text-embedding-ada-002), so that question vectors are comparable with the stored document vectors.

OpenAI Chat Model: Configure with OpenAI credentials and the chat model name. We use gpt-3.5-turbo for the demo.

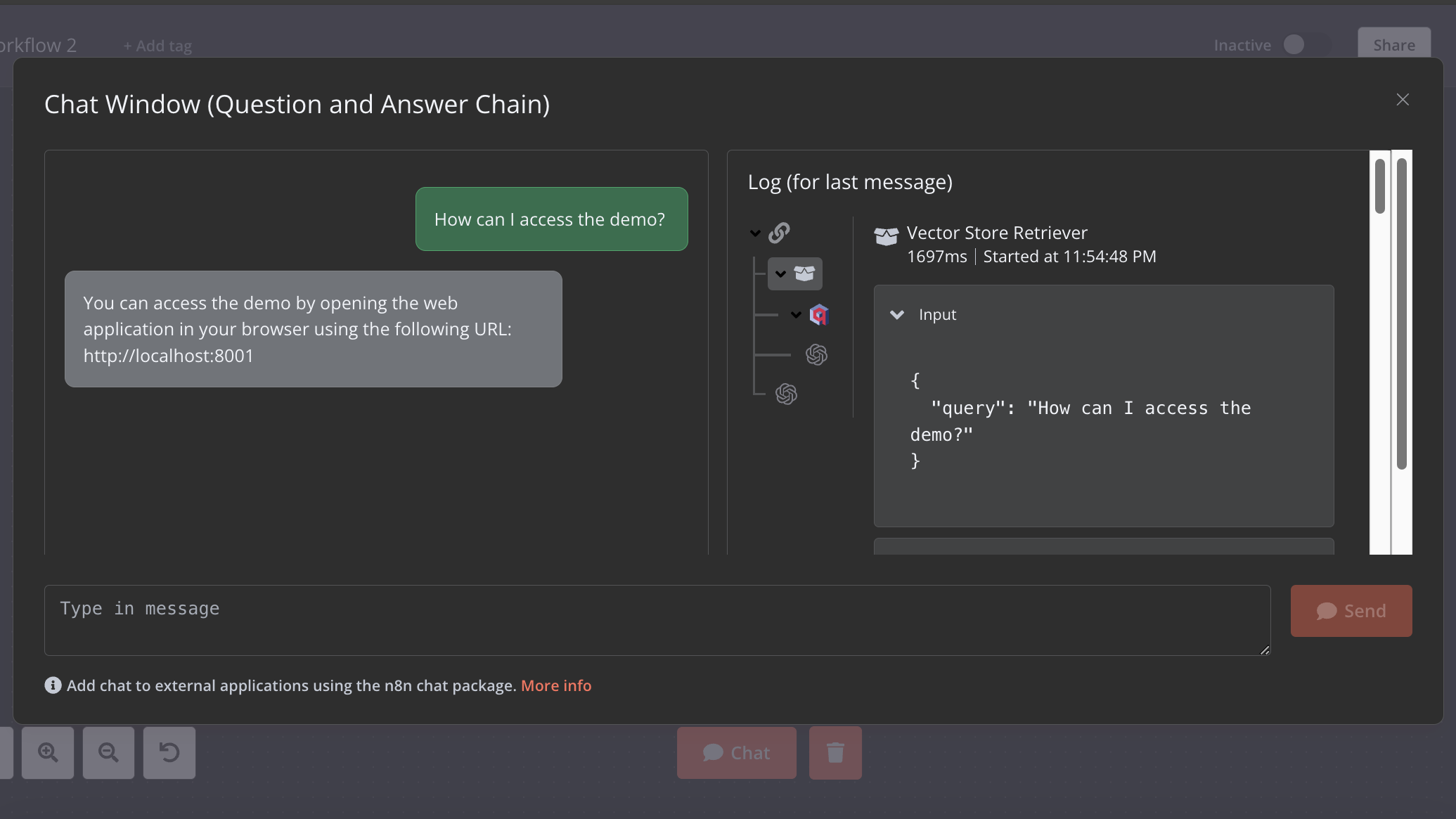
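
At chat time, the question is embedded with the same model, and the Retrieve node asks Qdrant for the most similar chunks. A brute-force version of that lookup fits in a few lines. This is a hypothetical illustration of cosine-similarity top-k search (the `top_k` helper and its `k=4` default are assumptions); Qdrant itself answers such queries with an approximate nearest-neighbor (HNSW) index rather than a full scan.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector, points, k=4):
    """Return the k (score, payload) pairs most similar to the query, best first.

    `points` is a list of (vector, payload) pairs standing in for a collection.
    """
    scored = [(cosine(query_vector, vec), payload) for vec, payload in points]
    # Sort on the score only, so payload dicts are never compared on ties.
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]
```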

Once configured, hit the “Chat” button to initiate the chat interface and begin a conversation with your codebase.
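
Each message you send passes through the Retrieval Q&A Chain, which combines the retrieved chunks with your question before calling the chat model. The sketch shows one common approach, “stuffing” every retrieved chunk into a single prompt and sending it as OpenAI-style chat messages (`role`/`content` dictionaries). The prompt wording, the `---` separator, and the `build_prompt`/`build_messages` helpers are illustrative assumptions, not the exact template n8n uses.

```python
def build_prompt(question, retrieved_chunks):
    """Stuff all retrieved code chunks into one prompt for the chat model."""
    context = "\n\n---\n\n".join(retrieved_chunks)
    return (
        "Use the following pieces of context to answer the question. "
        "If you don't know the answer, say that you don't know.\n\n"
        f"{context}\n\nQuestion: {question}\nHelpful answer:"
    )


def build_messages(question, retrieved_chunks):
    """Wrap the prompt in chat-message form (hypothetical system message)."""
    return [
        {"role": "system", "content": "You answer questions about a code repository."},
        {"role": "user", "content": build_prompt(question, retrieved_chunks)},
    ]
```

Stuffing is simple but bounded by the model’s context window, which is one reason the chunk size and the number of retrieved chunks matter.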

To embed the chat in your applications, consider using the @n8n/chat package. n8n also supports workflows that run on a schedule or are triggered by events from various applications.