Building production vector search shouldn’t be so hard.

We asked our users building vector search why they moved to Qdrant from Elastic, OpenSearch, or another Lucene-based search engine. Here's what they told us:

Unacceptably slow vector search queries

Endless indexing jobs

Overspending on overprovisioned memory

Brittle hybrid search

Lucene-based architectures just aren't built for scaling vector search.

Worried you'll collapse under real production load?

Qdrant is the AI retrieval engine built to handle dense, hybrid, and AI-native workloads at a scale and performance level that legacy systems were never designed for.

No JVM garbage-collection pauses, no 3 a.m. reindex job.

Qdrant Can Optimize

Latency

Build lightning-fast, large-scale AI applications on our Rust-powered, native vector search engine.

Ingestion Speed

Qdrant ingests millions of vectors per minute while remaining queryable.

Memory Provisioning

Stop overprovisioning RAM just to avoid failed index merges. Because Qdrant is built in Rust, it gives you finer control over memory.

Scaling

Keep meeting your search KPIs for speed, accuracy, and cost at scale with our vector-first features (e.g., native multivector support).

Check Out Our Most Powerful Features

Why Teams Run Qdrant Alongside Elastic

You don't have to rip anything out.

Most teams start by adding Qdrant next to their existing stack to handle new dense or hybrid workloads, the ones Elastic can't keep up with.

Using Qdrant, Sprinklr achieved 90% faster writes, 80% lower latency, and 2.5x higher RPS (requests per second) than with Elastic.

Ready to Build Vector Search the Right Way?

Move Beyond Elastic Without Rebuilding

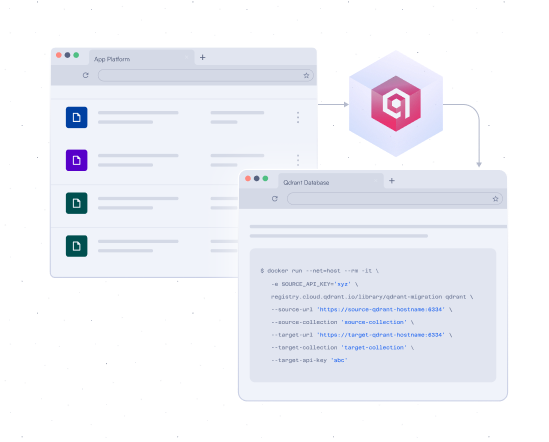

Seamlessly Bring Your Data to Qdrant with our Migration Tool.

Migrating from Elastic's Lucene-based stack doesn't have to mean starting over. Our Migration Tool streams your existing vector data directly into Qdrant: live, fast, and with zero downtime. It works even while data is still being inserted, supports reconfiguration, and eliminates the headaches of reindexing or overprovisioning.

Modernize your retrieval layer in hours, not weeks.

Migrate Now

We'll Help You Benchmark

If you have a production use case, run a side-by-side benchmark on your own index to measure latency, RAM footprint, and throughput before you decide.

Our team of Solution Architects will help you test feasibility, latency, and cost.

No strings attached, no commitment. Performance that speaks for itself.

Learn How to Use Top Features with Hands-On Lessons

Out-of-the-Box Hybrid Search

Meet every searcher's needs with hybrid search in Qdrant. Combine dense and sparse vectors, apply Reciprocal Rank Fusion (RRF), and build complex multi-stage pipelines, all in a single call with the Universal Query API.
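To make the fusion step concrete, here is a minimal, generic sketch of Reciprocal Rank Fusion in plain Python. Each document's fused score is the sum of 1 / (k + rank) over every ranked list it appears in. This is not Qdrant's internal code: Qdrant performs fusion server-side through the Universal Query API. The function name `reciprocal_rank_fusion`, the sample IDs, and the constant `k = 60` (the value commonly cited from the original RRF paper) are all illustrative assumptions and may not match Qdrant's defaults.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several best-first ranked lists of document IDs with RRF.

    Hypothetical helper for illustration only. k=60 is an assumed
    smoothing constant, not necessarily Qdrant's default.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        # Ranks are 1-based: the top hit in each list contributes 1 / (k + 1).
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Example: results from a dense (semantic) and a sparse (keyword) search.
dense_hits = ["doc3", "doc1", "doc7"]
sparse_hits = ["doc1", "doc9", "doc3"]
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
print([doc_id for doc_id, _ in fused])  # → ['doc1', 'doc3', 'doc9', 'doc7']
```

Because RRF uses only rank positions, it can merge dense and sparse results without normalizing their raw scores, which live on very different scales.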

Multivector Mastery

Qdrant supports token-level precision with multivector fields for ColBERT-style late interaction. MaxSim scoring matches each query token to its most similar document token and sums those scores, giving sharper relevance for complex text and, with ColPali, for visually rich documents.
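The MaxSim idea fits in a few lines. The sketch below is a generic illustration in plain Python, not Qdrant's implementation. It assumes each query and document is a list of per-token vectors and uses a raw dot product as the token similarity. Real ColBERT-style pipelines typically use normalized embeddings, so dot product equals cosine similarity. The names `dot` and `max_sim` and the toy 2-D vectors are hypothetical.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def max_sim(query_tokens, doc_tokens):
    """ColBERT-style late-interaction score (hypothetical helper).

    For each query token, take its best match among the document tokens,
    then sum those per-token maxima.
    """
    if not doc_tokens:
        raise ValueError("document must have at least one token vector")
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)


# Toy example with 2-D token vectors.
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.5, 0.5]]  # matches both query tokens well
doc_b = [[0.0, 1.0]]              # matches only the second query token
print(max_sim(query, doc_a), max_sim(query, doc_b))  # → 1.5 1.0
```

Because every query token keeps its own best match, a document that covers more of the query's terms or regions scores higher. A single pooled vector per document can blur that distinction.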

Build with Qdrant Cloud

Spin up a managed cluster in minutes, optimized for vector-heavy, real-time AI workloads. No more overprovisioning. No more reindexing. No more latency surprises.

Try Now